The Apple Vision Pro: an accessibility perspective

With the release of the Apple Vision Pro, the VR and AR space (I mean, Spatial Computing!) has found new energy. But before these devices go mainstream, we asked ourselves whether this new wave is a blessing or a curse for accessibility.

Accessibility is typically a first-class citizen in the Apple ecosystem, and visionOS, Apple’s operating system for Spatial Computing, is no different. Even though it’s a brand-new OS, it already offers a plethora of accessibility settings that should accommodate a wide variety of disabilities.

As always, the proof of the pudding is in the eating. So we set out to test the Vision Pro with Roel Van Gils. He’s a seasoned accessibility expert and co-founder of Eleven Ways, a Digital Accessibility Lab. Roel knows the importance of inclusive design like no other, as he himself has achromatopsia, a visual impairment that causes reduced overall vision and increased sensitivity to light. For him, relying solely on your eyes for computing seems like a big leap…

It’s immediately clear that this isn’t like upgrading to a new iPhone. It’s a completely new OS with lots of new technology, both hardware and software. This became painfully obvious when Roel tried to set up the Vision Pro. It’s a personal device like no other: it has to be adapted to your face, your eyes and your hands. Without proper calibration, the magic simply isn’t there. For people with a visual impairment, calibration is especially important, yet also especially challenging.

It just works. Right?

Even though visionOS is completely new, it comes from the same factory in Cupertino that also builds macOS, iOS and iPadOS. This makes the design language of the software feel familiar. The same goes for the hardware: it’s undeniably Apple, unboxing experience included.

The biggest difference in visionOS is the interaction. On the hardware side, you get a “top button” and a digital crown, similar to the Apple Watch. In software, you use a combination of hand and eye gestures. You select items by looking at them. This takes any new user some time to get used to, and even more so if you have a visual impairment.

Roel mostly uses shortcuts on his laptop and rarely relies on his vision alone to get things done. The Vision Pro also offers some shortcuts, but with so few hardware buttons, it takes a while to memorize the most important ones. Want to recenter your view? Long-press the digital crown. Want to quickly run the eye setup? Press the top button four times, or quadruple-click, as Apple calls it. You can also open the accessibility settings directly with a mere triple-click.

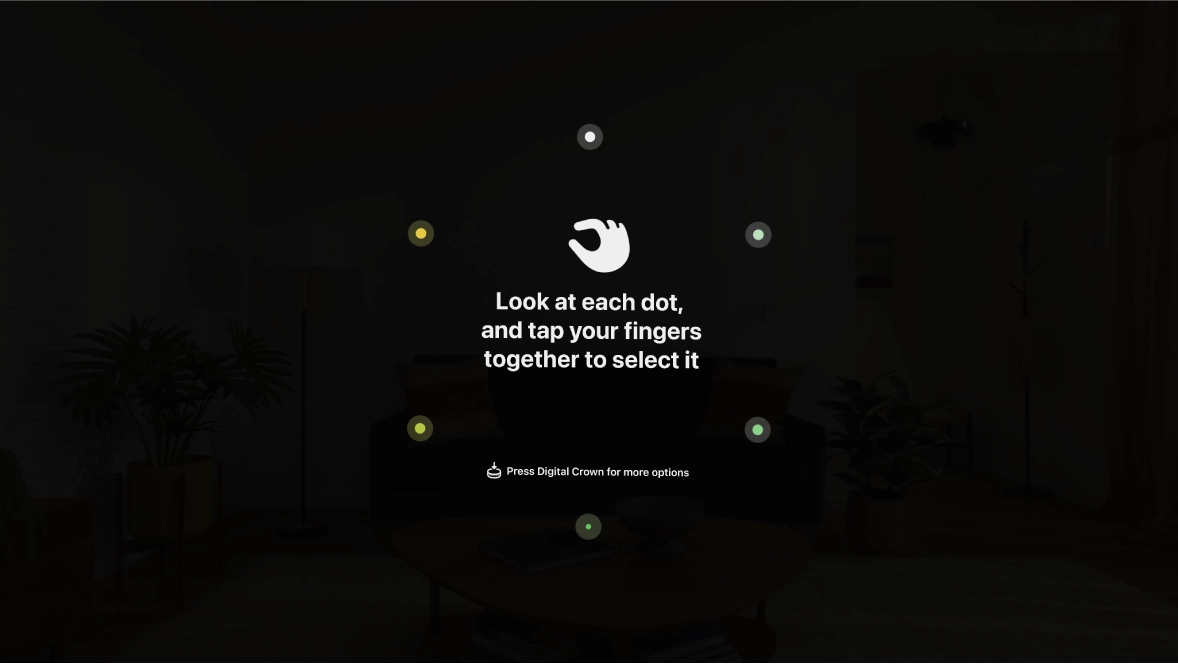

The eye and hand setup process calibrates the device and also teaches you how to use it. You see a circle of dots and have to select them one by one. You have to do this three times, and each time the background gets brighter. By the third round, the image was too bright for Roel, so he had to squint a bit. The setup then finished with an “Eye set up unsuccessful” message, without any further explanation. Apple products are known for their brevity, sometimes to the point where it hurts the user experience when things don’t “just work”.

The solution was to configure the accessibility settings first. As on iOS, visionOS has an option to reduce the white point, a setting that lowers the intensity of bright colours.

The Vision Pro’s eye and hand setup.

Pass through, see better

Once the device was set up properly, the rest went a lot more smoothly. An often-heard complaint is the low quality of the passthrough video, which Apple calls Immersion. Since the Vision Pro is fully opaque, you see the world around you through a live camera feed shown on the Vision Pro’s tiny displays. The feed does have some noticeable motion blur and reduced color. For Roel, however, whose vision is already reduced, this was barely noticeable and far less of an issue.

With the white point reduced, Roel could in some ways actually see better. The live signal processing could automatically optimize what he sees, instead of him having to wear coloured lenses all the time and take heavily tinted sunglasses on and off depending on the surrounding light. This type of augmented reality holds real promise for many types of visual impairment.

One step forward, two steps back?

So what does the future hold for spatial computing in terms of accessibility? Is this it? Is the Vision Pro a blessing for accessibility? Unfortunately not. We’ll look back at it as an important step forward and a true feat of engineering, but not as a device that left a lasting impression. For that, some pieces of the puzzle are still missing. First of all, the price needs to come down. At €4,000, this device is simply not affordable for most people.

Second is the form factor. Anyone who tries on the Vision Pro for the first time is shocked by its weight. The premium materials, along with all the required hardware (cameras, screens, sensors), make this a very heavy device. In our experience, it is too heavy to wear comfortably for more than 15 minutes.

Another important element will be the integration of performant multimodal AI models, some of which are already in the works. For those with severe visual impairments, this will be a necessity. Combined with spatial audio, this holds a lot of promise. If an AI can interpret what’s happening around you and explain it to you, this could bring independence to many people who currently need another pair of eyes for many tasks. Experimental AI products like Humane’s Ai Pin and the rabbit r1 make it painfully obvious that the technology is simply not there yet to do this well. But at the current pace of AI development, we can expect this sooner rather than later.

A final element would be to streamline importing your accessibility preferences and sharing them across devices. Within the Apple ecosystem, this should simply work out of the box. Once you’ve set everything up on your iPhone or MacBook, you should be offered the option to carry these settings over to your iPad and Vision Pro too. Even better would be a device-agnostic system that encodes your preferences in a QR code which you can scan on any new device. In terms of standardisation, this would be a big step forward.